About Us

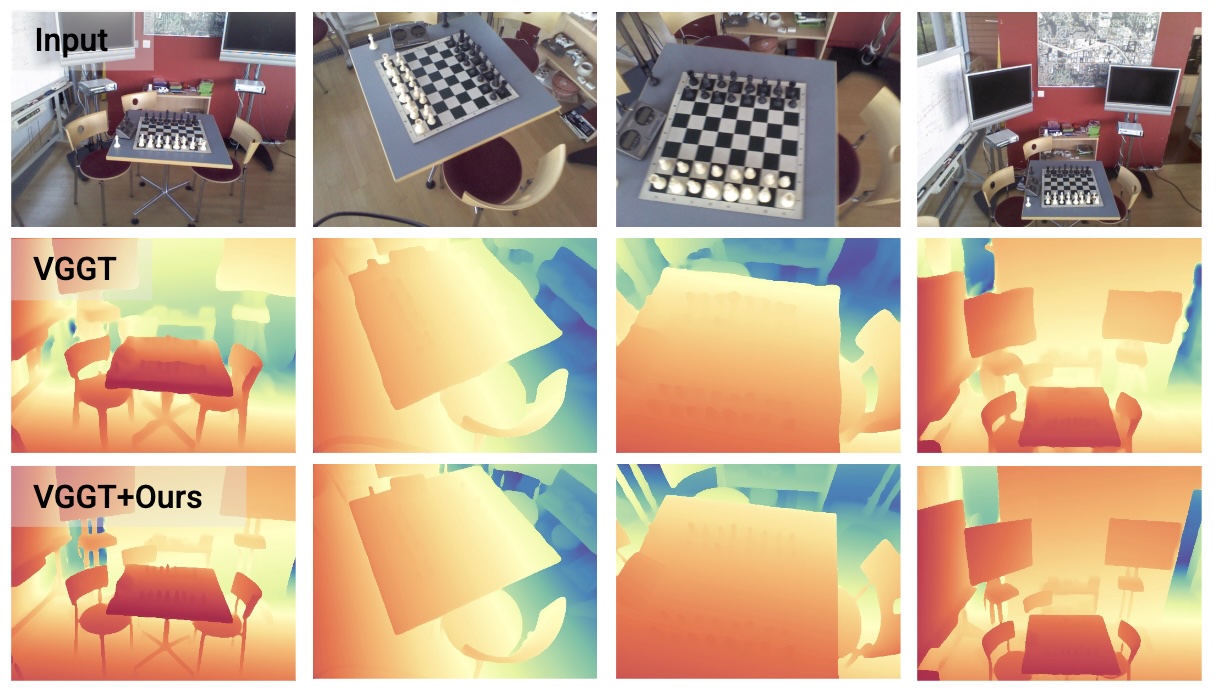

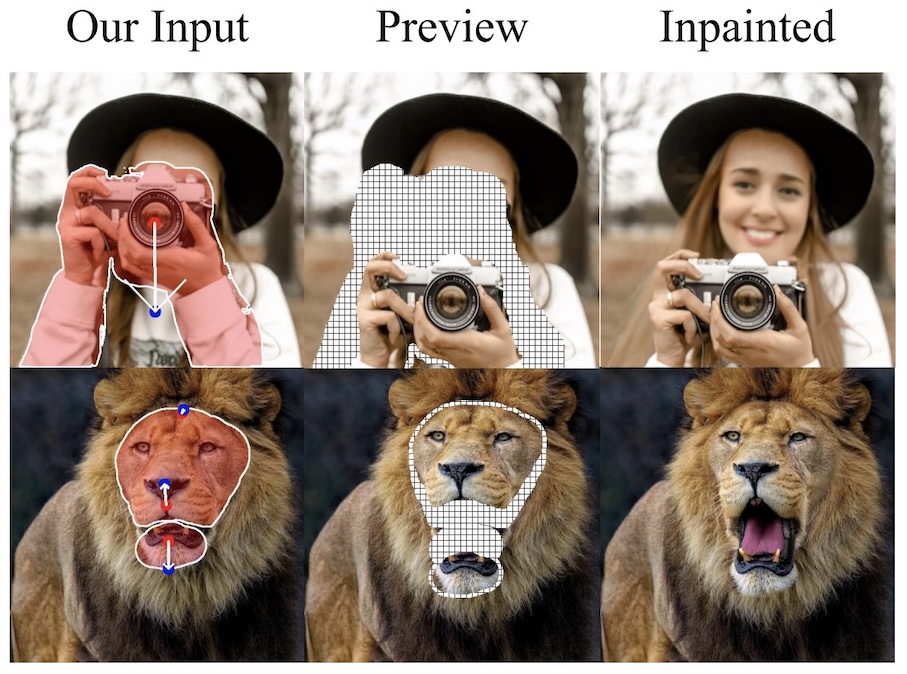

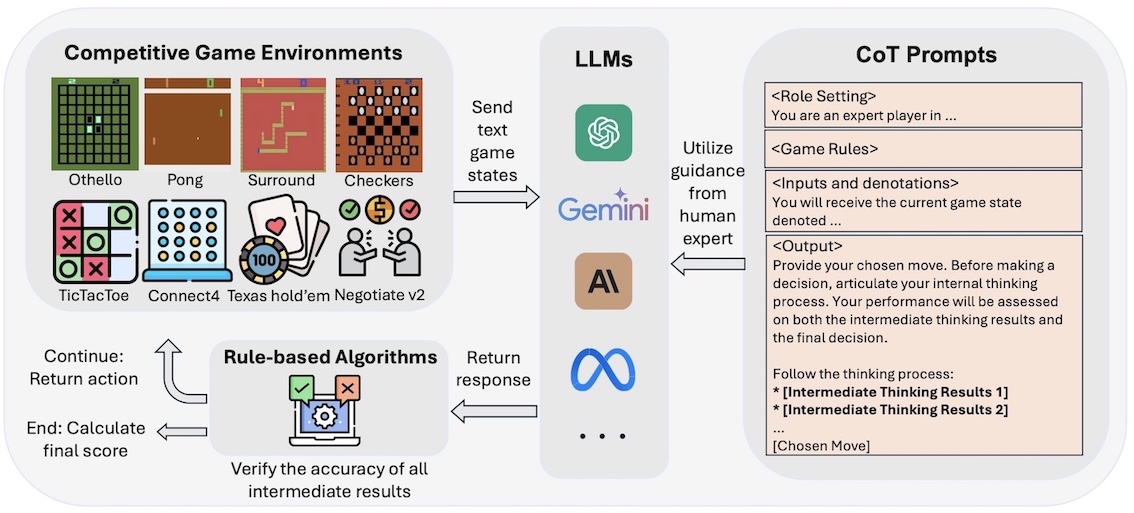

Visual AI Lab (VAIL) is a research group directed by Prof. Kai Han at the School of Computing and Data Science, The University of Hong Kong, working on computer vision, machine learning, and artificial intelligence. The overarching goal of our research is to achieve principled and comprehensive visual understanding, close the intelligence gap between machines and humans, and build reliable AI systems for open-world use. Our current research focuses on open-world learning, 3D vision, generative AI, foundation models, and related fields.

🚩 Openings:

(1) PhD students: We are always looking for strong students to work on exciting research problems (☞ fellowships and scholarships).

(2) Postdocs: Positions in computer vision and deep learning are available.

(3) HKU Summer Research Programme 2026 (☞ details. Deadline: Feb 9, 2026), with the possibility of a conditional PhD offer for the 2027 intake with an HKU-PS scholarship.

(4) HKU CDS Research Internship Programme (☞ details. Deadline: May 31, 2026, or once all places are filled, which is normally earlier), with the possibility of a conditional PhD offer for the 2027 intake.

Please drop Prof. Kai Han an email with your resume if you are interested in working with us.